I ran a test last month that made me uncomfortable. Actually, uncomfortable isn’t the right word… it made me question everything I thought I knew about pricing web development work. I hired an experienced SEO auditor to analyze a client’s website (technical issues, content gaps, optimization opportunities… the whole package). It took them three to four hours of focused work.

Then I fed the exact same site into Claude with the same instructions. Ten minutes… that’s how long it took the AI to deliver a more comprehensive analysis. It caught inconsistent schema markup across similar pages that the human had missed, plus subtle keyword cannibalization patterns buried deep in the site structure. The human auditor brought incredible strategic thinking to the table, but for comprehensive technical crawling and pattern recognition across hundreds of pages, the AI was more thorough… and roughly 18 to 24 times faster.
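For the technically curious, here’s a minimal sketch of one check an automated audit might run: flagging pages whose JSON-LD schema types don’t match their siblings. It uses Python’s standard library only. It assumes pages are keyed by URL path and that pages sharing a first path segment (like `/products/…`) share a template. That grouping rule and all the function names are my own illustration, not how Claude or any particular crawler actually works.

```python
import json
from collections import Counter, defaultdict
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def schema_types(html):
    """Return the set of schema.org @type values a page declares in JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    types = set()
    for block in parser.blocks:
        data = json.loads(block)
        for item in data if isinstance(data, list) else [data]:
            declared = item.get("@type")
            if isinstance(declared, list):
                types.update(declared)
            elif declared:
                types.add(declared)
    return frozenset(types)


def inconsistent_pages(pages):
    """pages maps URL path -> HTML. Pages sharing a first path segment are
    treated as one template (a rough, hypothetical heuristic). Any page whose
    schema types differ from that group's most common set is flagged."""
    groups = defaultdict(dict)
    for path, html in pages.items():
        template = path.strip("/").split("/")[0]
        groups[template][path] = schema_types(html)
    flagged = []
    for by_path in groups.values():
        majority, _ = Counter(by_path.values()).most_common(1)[0]
        flagged.extend(p for p, types in by_path.items() if types != majority)
    return sorted(flagged)


def jsonld(type_name):
    """Hypothetical helper that builds a one-line JSON-LD snippet for testing."""
    return f'<script type="application/ld+json">{{"@type": "{type_name}"}}</script>'


pages = {
    "/products/blue-mug": jsonld("Product"),
    "/products/red-mug": jsonld("Product"),
    "/products/green-mug": jsonld("Thing"),
}
print(inconsistent_pages(pages))  # ['/products/green-mug']
```

On a real audit you’d feed this crawled HTML. Deciding which pages really share a template is the part that still needs judgment, because not every URL prefix maps cleanly to one page type.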

That’s when I realized we need to talk about what’s actually happening in web development right now. Most clients don’t understand the gap between what they’re being quoted and what the work actually requires today. I’m starting to see a pattern emerge across my client base… the disconnect is massive.

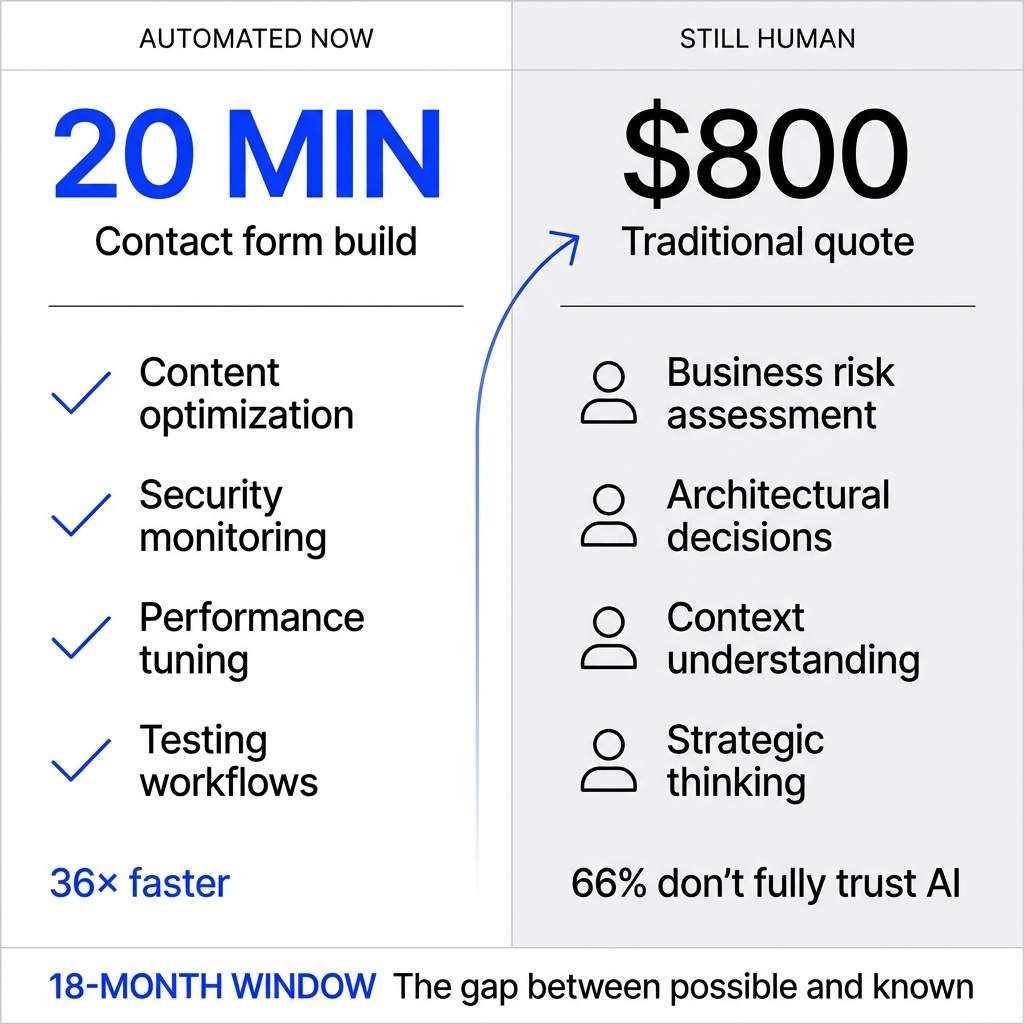

The $800 Contact Form

Here’s a real example I watched unfold: A competing developer quoted a client $800 for a “complex” form with validation and email integration. Two full days of work, he claimed. I built the exact same thing in 20 minutes using ChatGPT and a form builder API (every custom validation rule, every automated email response, everything). The AI even generated cleaner code than I’d seen from that developer’s previous projects.
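To show why this is routine work, here’s a minimal Python sketch of the two pieces that quote covered: server-side validation and an automated confirmation email. The field names, the 10-character message minimum, and the sender address are hypothetical. The email is composed with the standard library’s `email.message.EmailMessage` but not actually sent.

```python
import re
from email.message import EmailMessage

# Pragmatic email check (not full RFC 5322); these rules are hypothetical.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MIN_MESSAGE_LENGTH = 10


def validate(form):
    """Return {field: error}; an empty dict means the submission is valid."""
    errors = {}
    if not form.get("name", "").strip():
        errors["name"] = "Name is required."
    if not EMAIL_RE.match(form.get("email", "").strip()):
        errors["email"] = "Enter a valid email address."
    if len(form.get("message", "").strip()) < MIN_MESSAGE_LENGTH:
        errors["message"] = f"Message must be at least {MIN_MESSAGE_LENGTH} characters."
    return errors


def build_auto_reply(form, sender="noreply@example.com"):
    """Compose, but do not send, the automated confirmation email."""
    reply = EmailMessage()
    reply["From"] = sender
    reply["To"] = form["email"].strip()
    reply["Subject"] = "We received your message"
    reply.set_content(
        f"Hi {form['name'].strip()},\n\n"
        "Thanks for getting in touch. We'll get back to you shortly."
    )
    return reply


submission = {"name": "Ada", "email": "ada@example.com", "message": "I need a quote for a new site."}
problems = validate(submission)
if not problems:
    reply = build_auto_reply(submission)
    # Hand `reply` to smtplib.SMTP(...).send_message(reply) or your email provider's API.
    print(reply["To"], "|", reply["Subject"])
```

Most of the remaining effort in a real project goes into styling and spam protection. Those are worth paying for, but they don’t add up to two days for a standard form.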

Look, I’m not sharing this to trash developers… I’m sharing it because the data is hard to ignore: developers report saving 30-60% of their time using AI tools. So when someone quotes you 10 hours for basic implementation work, you need to understand what you’re actually paying for. Now here’s where it gets interesting… research also shows that experienced developers working on complex tasks actually take 19% longer when using AI tools.

The automation crushes routine tasks but breaks down when you need deep architectural thinking. This distinction matters… a lot. It’s the difference between work that’s genuinely hard and work that just looks hard from the outside.
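Here’s the arithmetic on that 10-hour quote, as a rough Python sketch. It treats the reported 30-60% savings and the 19% slowdown as simple multipliers. That is a big simplification, and neither figure tells you how any specific project will go.

```python
def ai_assisted_range(quoted_hours, saving_low_pct=30, saving_high_pct=60):
    """Hours left on routine work after the reported 30-60% time savings."""
    return (quoted_hours * (100 - saving_high_pct) / 100,
            quoted_hours * (100 - saving_low_pct) / 100)


def complex_work_hours(baseline_hours, slowdown_pct=19):
    """Hours on complex work with AI, if the reported 19% slowdown applies."""
    return baseline_hours * (100 + slowdown_pct) / 100


print(ai_assisted_range(10))   # (4.0, 7.0)
print(complex_work_hours(10))  # 11.9
```

So a 10-hour routine job might realistically land between 4 and 7 hours with AI help. A complex task scoped at 10 hours could stretch to about 11.9.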

What’s Actually Automatable Right Now

I’ve spent 30+ years in this industry watching technology shifts reshape everything. This one’s different… the gap between what’s technically possible and what most clients understand is absolutely massive. And that gap is creating some uncomfortable truths I’m just starting to unpack. Here’s what AI handles well in 2025 (based on what I’m observing across my client work)…

Content optimization that used to require specialist knowledge is now literally one-click… automated meta tags and titles save hours of manual work that agencies used to bill for. I’ve personally watched tools generate complete, functional websites in under 40 seconds. Forty seconds… for work that used to take days.
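The mechanical half of meta tag automation is simple enough to sketch. This Python example assumes the title and summary copy already exist, whether written by a person or an AI tool, and only handles length limits and HTML escaping. The 60- and 155-character limits are common rules of thumb, not published search-engine requirements.

```python
import html

TITLE_LIMIT = 60         # common rule of thumb, not a search-engine guarantee
DESCRIPTION_LIMIT = 155  # likewise; actual display limits vary by device


def truncate_at_word(text, limit):
    """Collapse whitespace and cut to at most `limit` characters at a word boundary."""
    text = " ".join(text.split())
    if len(text) <= limit:
        return text
    cut = text[: limit - 1].rsplit(" ", 1)[0]
    return cut.rstrip(",;:.") + "…"


def meta_tags(page_title, site_name, summary):
    """Render <title> and meta description tags from existing copy."""
    title = truncate_at_word(f"{page_title} | {site_name}", TITLE_LIMIT)
    description = truncate_at_word(summary, DESCRIPTION_LIMIT)
    return (
        f"<title>{html.escape(title)}</title>\n"
        f'<meta name="description" content="{html.escape(description)}">'
    )


print(meta_tags("Emergency Plumbing in Austin", "Acme Plumbing",
                "Licensed plumbers available 24/7 for burst pipes, leaks, and "
                "water heater failures anywhere in the Austin metro area."))
```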

Security monitoring that once cost $150+ per hour is being automated at scale (about 50% of businesses are expected to use AI in cybersecurity and fraud management in 2025). I ran a 30-day AI security experiment on a client site where the system flagged what looked like completely normal activity to me… just someone using legitimate credentials to access different parts of the network. Turns out it was lateral movement… someone methodically mapping out the entire network infrastructure over several days. Would’ve taken me weeks to piece that pattern together manually (if I even caught it at all).
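The pattern that system caught can be approximated with a much simpler heuristic. First, learn which hosts each account normally touches. Then flag accounts that suddenly reach many hosts they’ve never used, even when every login is valid. This Python sketch is a toy illustration under that assumption. The event format, the `max_new_hosts` threshold, and the sample data are hypothetical, and real monitoring tools use far richer behavioral models.

```python
from collections import defaultdict


def flag_lateral_movement(events, baseline_end, max_new_hosts=5):
    """events: iterable of (day, account, host) login records.
    Learns each account's usual hosts from days before `baseline_end`, then
    flags accounts that reach more than `max_new_hosts` unseen hosts afterwards."""
    known = defaultdict(set)  # account -> hosts seen during the baseline
    new = defaultdict(set)    # account -> hosts first seen after the baseline
    for day, account, host in sorted(events):  # sort so baseline days come first
        if day < baseline_end:
            known[account].add(host)
        elif host not in known[account]:
            new[account].add(host)
    return {acct: sorted(hosts) for acct, hosts in new.items() if len(hosts) > max_new_hosts}


baseline = [(day, "alice", host) for day in range(7) for host in ("mail", "crm")]
baseline += [(day, "bob", "mail") for day in range(7)]
# Bob's legitimate credentials reach two new servers a day for four days.
probe = [(7 + i // 2, "bob", f"db{i}") for i in range(8)]
print(flag_lateral_movement(baseline + probe + [(8, "alice", "crm")], baseline_end=7))
# {'bob': ['db0', 'db1', 'db2', 'db3', 'db4', 'db5', 'db6', 'db7']}
```

No single login here looks suspicious. The signal only shows up once you aggregate across days, which is exactly why it’s hard to spot by hand.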

Performance tuning that traditionally required deep technical knowledge is increasingly automated. AI examines your code, identifies redundancies, minimizes HTTP requests, and prioritizes essential resources… it even resizes and compresses images automatically without visible quality loss. That’s work that traditionally cost $100-200 per hour. Testing is moving the same way: 81% of development teams now use AI in their testing workflows, and testing automation that used to require specialized QA teams is becoming standard infrastructure.
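One small piece of automated image optimization is deciding which sizes to generate. This Python sketch computes aspect-ratio-preserving widths for a few breakpoints and builds an HTML `srcset` value. The breakpoints and the `hero-{w}.webp` naming scheme are assumptions. The actual re-encoding and compression would be handled by an image library or CDN.

```python
def resized_dimensions(width, height, target_width):
    """Scale to target_width while keeping the aspect ratio; never upscale."""
    if target_width >= width:
        return width, height
    return target_width, round(height * target_width / width)


def srcset(path_template, width, height, breakpoints=(480, 768, 1200, 1920)):
    """Build a srcset value for pre-generated variants named by path_template."""
    widths = sorted({resized_dimensions(width, height, bp)[0] for bp in breakpoints})
    return ", ".join(f"{path_template.format(w=w)} {w}w" for w in widths)


print(resized_dimensions(1600, 900, 768))   # (768, 432)
print(srcset("hero-{w}.webp", 1600, 900))
# hero-480.webp 480w, hero-768.webp 768w, hero-1200.webp 1200w, hero-1600.webp 1600w
```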

What AI Still Can’t Do

I learned this lesson the expensive way while trying to automate support triage for my own business. Three weeks of constant tweaking… that’s what it took to train the AI to distinguish urgent customer issues requiring my immediate attention from routine questions it could handle on its own. The hardest part was building enough real examples and edge cases so it wouldn’t escalate everything as urgent or miss genuinely critical issues. That failure taught me where the boundaries actually are.
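Whatever model does the classifying, what took those three weeks is really an evaluation problem: checking a labeled set of edge cases against both failure modes. Here’s a minimal Python sketch of that harness. The keyword classifier is a deliberately simple stand-in for the AI, and the example messages and signal words are hypothetical.

```python
# Hypothetical labeled examples; a real set needs many more edge cases.
EXAMPLES = [
    ("Checkout is down and customers can't pay", True),
    ("How do I change my password?", False),
    ("Site shows a security warning on every page", True),
    ("URGENT: can you update the copyright year in our footer?", False),
    ("Today's orders never reached the warehouse", True),
]

# Stand-in for the AI classifier: simple keyword rules.
URGENT_SIGNALS = ("down", "can't pay", "security", "never reached", "outage")


def is_urgent(message):
    text = message.lower()
    return any(signal in text for signal in URGENT_SIGNALS)


def evaluate(classifier, examples):
    """Report both failure modes: routine messages escalated, critical ones missed."""
    return {
        "over_escalated": [m for m, urgent in examples if classifier(m) and not urgent],
        "missed_critical": [m for m, urgent in examples if urgent and not classifier(m)],
    }


print(evaluate(is_urgent, EXAMPLES))
# {'over_escalated': [], 'missed_critical': []}
```

A naive rule that escalates anything containing "urgent" shows both failure modes at once. `evaluate(lambda m: "urgent" in m.lower(), EXAMPLES)` over-escalates the footer request and misses all three genuinely critical messages.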

Here’s what still absolutely requires human judgment: understanding business risk, knowing which bugs actually matter to users versus which are cosmetic, making calls when test results contradict each other. AI handles the repetitive grunt work that burns out good engineers (test generation, flake detection, self-healing selectors). But it can’t understand nuanced context the way experienced developers can… not yet anyway.
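Flake detection is a good example of that grunt work. A common definition of a flaky test is one that both passes and fails on the same commit, since nothing in the code changed between runs. Here’s a minimal Python sketch built on that definition. The run-record format is hypothetical, and real CI tools also look at retries, timing, and history.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """runs: iterable of (commit_sha, test_name, passed) records.
    Flags tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(passed)
    return sorted({test for (_, test), seen in outcomes.items() if len(seen) == 2})


runs = [
    ("a1f3", "test_login", True),
    ("a1f3", "test_login", False),   # same code, different result: flaky
    ("a1f3", "test_cart", False),
    ("a1f3", "test_cart", False),    # consistently failing: a real bug
    ("b7c9", "test_cart", True),     # fixed in a later commit
]
print(find_flaky_tests(runs))  # ['test_login']
```

The judgment call is what happens next. Deciding whether `test_cart` failing was a real regression that matters to users is still a human decision.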

I’m also still trying to automate social image generation for different devices… there’s not a good solution yet. It’s emerging technology that’ll hopefully get there, but right now it’s still manual work. These gaps are worth understanding because they show you where the real complexity lives.

The Trust Problem

Here’s the dirty secret: 46% of developers say they actively distrust the accuracy of AI tools. Only 33% trust it, and just 3% highly trust AI-generated output. That helps explain why some developers are slow to adopt these tools… or, more importantly, why they’re hesitant to disclose when they’re using them.

The core problem: 66% of developers say their biggest frustration is AI output that’s “almost right, but not quite.” The code looks right, passes initial review, then fails spectacularly during testing. So developers spend extra time checking and editing AI output, which can cancel out the time savings they expected. Some of this is a prompting problem… we’re all still learning to communicate effectively with these tools.
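Here’s a hypothetical illustration of that “almost right” pattern, written for this post rather than taken from real AI output: a slug function that passes the obvious test but breaks on punctuation and accented characters. A small table of edge cases catches it in seconds, and that checking step is exactly what eats into the time savings.

```python
import re
import unicodedata


def slugify_almost_right(title):
    """Plausible-looking version: fine on simple titles, wrong on punctuation and accents."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower())


def slugify(title):
    """Corrected version: fold accents to ASCII and trim stray hyphens."""
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


EDGE_CASES = {
    "Hello World": "hello-world",
    "Hello, World!": "hello-world",
    "Café au lait": "cafe-au-lait",
}


def failures(fn):
    """Return every edge-case title the candidate function gets wrong."""
    return [title for title, expected in EDGE_CASES.items() if fn(title) != expected]


print(failures(slugify_almost_right))  # ['Hello, World!', 'Café au lait']
print(failures(slugify))               # []
```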

This is the uncomfortable gap between AI hype and actual reality. AI is genuinely powerful for specific, well-defined tasks, but it’s not magic… and it’s definitely not replacing the need for experienced technical judgment. Understanding this gap is how you avoid getting burned by overpromises.

The 18-Month Window

Here’s what keeps me up at night: the next 18 months will separate winners from everyone else in this space. This isn’t arbitrary timing… AI models are approaching the threshold where they can maintain full conversation context across multiple sessions and truly personalize at scale. Companies that have these systems integrated by then will have built the data flywheel and accumulated the user behavior patterns that become nearly impossible for competitors to replicate.

After that point, you’re permanently behind on understanding how your users actually interact with AI-mediated experiences. That accumulated learning becomes the only real competitive moat. We’ve already seen massive disruption… in just the last 14 months, Google pushed three major updates that fundamentally changed how 40% of all searches work. My 18-month projection is educated speculation, but we should see a complete rewrite of search functionality within that window.

The SEO Shift Nobody’s Ready For

AI search engines like ChatGPT, Perplexity, and Google’s AI Overviews don’t just crawl and rank pages anymore (that’s old paradigm thinking). They synthesize information and cite sources based on relevance, clarity, and contextual authority. Traditional SEO obsessed over keywords and backlinks… AI search prioritizes content that directly answers questions with structured, authoritative information that’s easy to extract and cite.

I caught this shift in mid-2023 when I started testing client sites in ChatGPT and Perplexity just to see what would happen. Sites that ranked beautifully in traditional search weren’t necessarily getting cited by AI… sometimes they weren’t mentioned at all. The single most important thing you should start doing right now: create “citation-worthy” content (clear, well-sourced answers to specific questions in your niche that an AI would confidently reference as authoritative). Stop writing keyword-stuffed pages designed to game traditional algorithms.
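One concrete way to make answers easy to extract is to publish them as schema.org `FAQPage` structured data alongside the visible text. This Python sketch generates that JSON-LD from question-and-answer pairs, and the sample Q&A is a placeholder. I can’t promise that any particular AI search engine weights this markup when choosing what to cite. Treat it as a way to make your content machine-readable, not as a citation guarantee.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage structured data from (question, answer) pairs."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }, indent=2)


markup = faq_jsonld([
    ("What does your SEO audit cover?",
     "Technical issues, content gaps, and optimization opportunities."),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```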

If you’re not already adapting your SEO and marketing strategy to these new AI search patterns, you’re setting yourself up to play catch-up for the next five to seven years. This shift is hard but workable if you start making changes now.

What Actually Matters: The Three-Variable Framework

When I evaluate which AI capabilities actually matter for a specific site, I use a three-variable framework I’ve developed over the last year. Content velocity tells me whether they need generation tools or optimization tools (a news site publishing 50 articles daily has radically different needs than a SaaS company putting out two pieces per month). User intent complexity shows whether I need semantic analysis and entity extraction, or if basic keyword targeting still delivers results. Conversion pathway length determines whether I need sophisticated personalization engines or simpler recommendation systems (an e-commerce site with quick purchase decisions doesn’t need the same AI infrastructure as a B2B platform where buyers research for months before converting).

This framework helps me avoid the dangerous trap of “more AI is always better.” The right approach isn’t more AI… it’s strategic AI matched precisely to site type and business model. It depends on what you’re actually trying to optimize.
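If it helps to see the framework as logic, here’s a rough Python encoding of it. The three inputs mirror the variables above. The cutoffs (100 articles a month, 30 days to convert) are illustrative assumptions rather than fixed rules, and real decisions involve more nuance than a boolean.

```python
from dataclasses import dataclass


@dataclass
class Site:
    articles_per_month: int   # content velocity
    complex_intent: bool      # does user intent need semantic/entity analysis?
    days_to_convert: int      # conversion pathway length


def recommend(site):
    """Map the three variables to a tooling focus. Cutoffs are illustrative."""
    return [
        "content generation tools" if site.articles_per_month >= 100
        else "content optimization tools",
        "semantic analysis and entity extraction" if site.complex_intent
        else "basic keyword targeting",
        "personalization engine" if site.days_to_convert > 30
        else "simple recommendation system",
    ]


news_site = Site(articles_per_month=1500, complex_intent=False, days_to_convert=1)
b2b_platform = Site(articles_per_month=2, complex_intent=True, days_to_convert=120)
print(recommend(news_site))
print(recommend(b2b_platform))
```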

The Directory System That Used to Cost $80K

Some of the better AI agents can now build custom WordPress systems and directory platforms that used to cost $20,000 to $80,000… in five or six days. I’m watching this happen in real time with actual client projects. Custom features that required 800 to 1,000 development hours are being compressed into week-long builds. Payment gateway integration that used to take 30 to 50 hours, seat assignment features that required 40 to 60 development hours… all compressed. This isn’t theoretical future-casting… this is happening right now with clients who understand how to leverage these tools effectively.

What This Means for You

Let me be clear: I’m not anti-developer. I’m pro-transparency about what’s genuinely difficult versus what’s now automated. When you get a quote for web work, you should understand which parts involve AI-assisted development, how the time breaks down between automated tasks and custom problem-solving, and which specific challenges require human expertise rather than routine implementation. Good developers will be completely honest about this… they’ll explain precisely where AI accelerates their work and where it doesn’t, and they’ll show you the actual value they’re adding beyond what automation provides.
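If you want to ask for that breakdown in a structured way, here’s a minimal Python sketch of a quote split into AI-assisted and custom line items. The tasks, hours, and $100 hourly rate are hypothetical placeholders, not real pricing.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    task: str
    hours: float
    ai_assisted: bool  # True for routine work that AI tooling accelerates
    note: str = ""


def summarize(items, hourly_rate):
    """Total the quote, split into AI-assisted and custom problem-solving hours."""
    assisted = sum(item.hours for item in items if item.ai_assisted)
    custom = sum(item.hours for item in items if not item.ai_assisted)
    return {
        "ai_assisted_hours": assisted,
        "custom_hours": custom,
        "total_cost": (assisted + custom) * hourly_rate,
    }


quote = [
    LineItem("Contact form with validation", 1.0, True),
    LineItem("Meta tags and titles", 0.5, True),
    LineItem("Payment gateway edge cases", 6.0, False, "refunds, failed webhooks"),
]
print(summarize(quote, hourly_rate=100))
# {'ai_assisted_hours': 1.5, 'custom_hours': 6.0, 'total_cost': 750.0}
```

Even this crude split makes the conversation easier. In the example, 1.5 of the 7.5 hours are routine AI-assisted work, and the remaining 6 hours are where you’d expect the developer to explain the custom problem-solving.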

The developers who resist or dodge this conversation are the ones who should worry you. The gap between what’s technically possible and what you’re being charged for is only going to widen. The clients who understand this difference are the ones who’ll build faster, cheaper, and smarter over the next 18 months… while everyone else is still trying to figure out what happened.

The technology isn’t your enemy… opacity is. Understanding where automation helps and where human expertise still matters is how you make smart decisions about what you’re actually paying for.